33.5K views

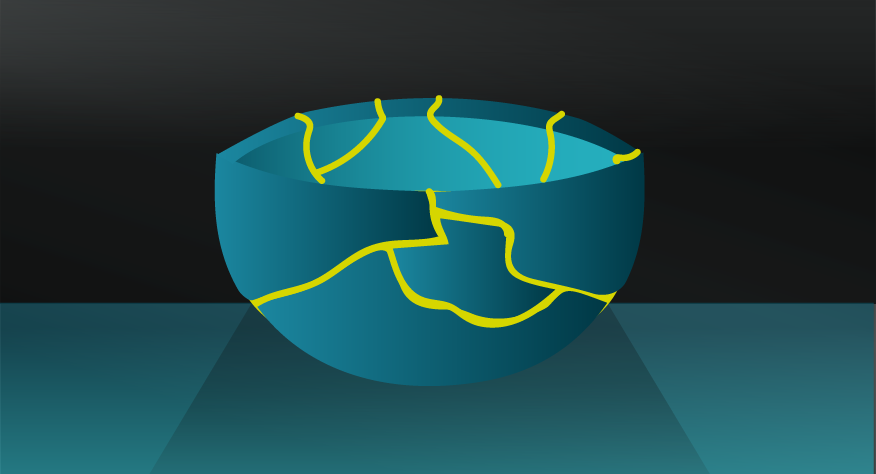

We'll be honest with you: this one sucks. You might notice that we tend to use the word ‘heuristic’ rather than ‘bias’ to describe mental shortcuts. They're all biases of a sort, but such a judgemental term tends to hide their potential payoffs. That said, if there were one heuristic that earned the ‘bias’ label, with few redeeming features, it’s this one.

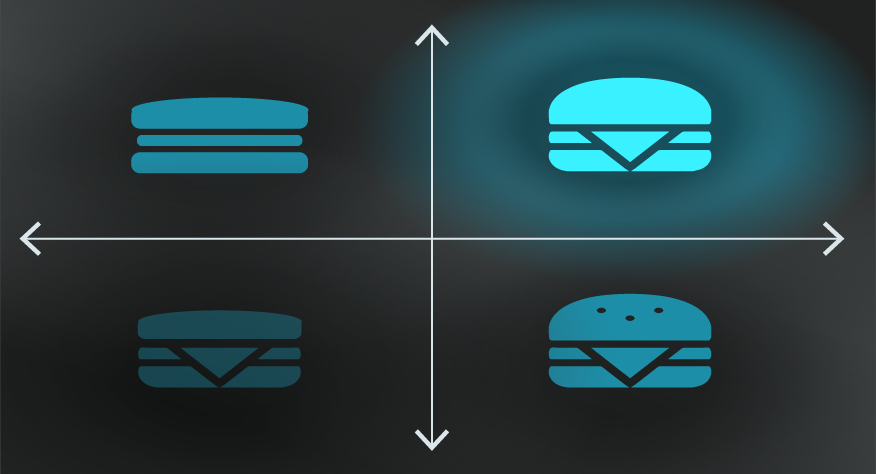

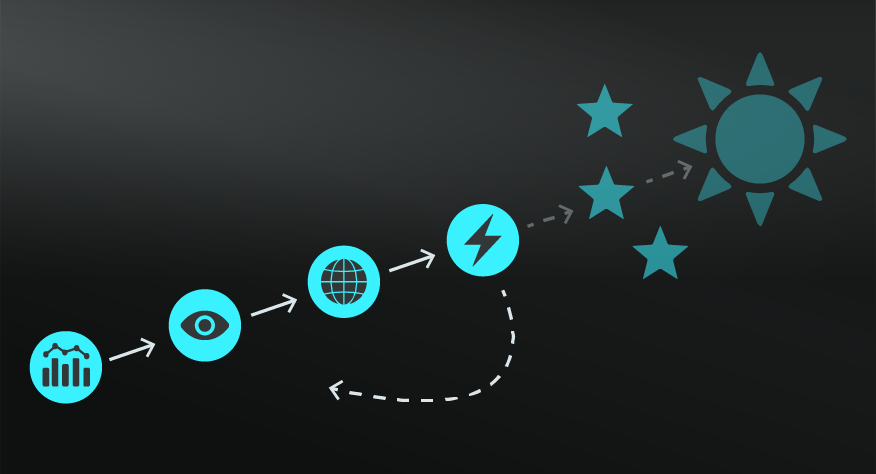

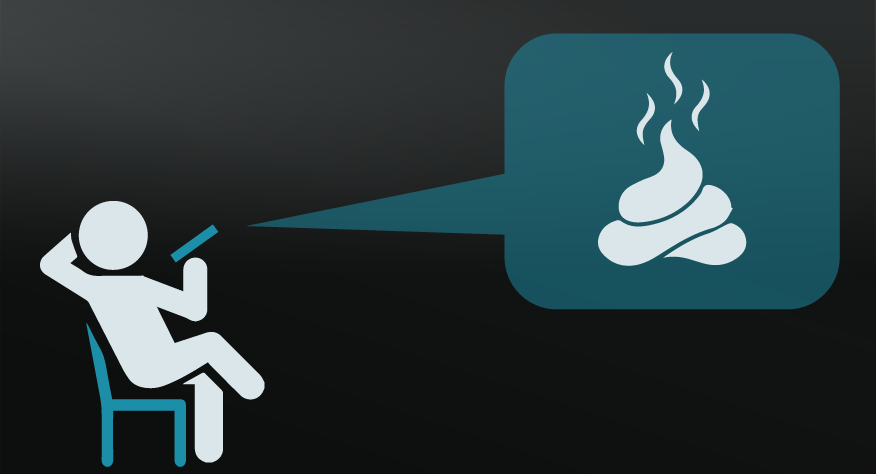

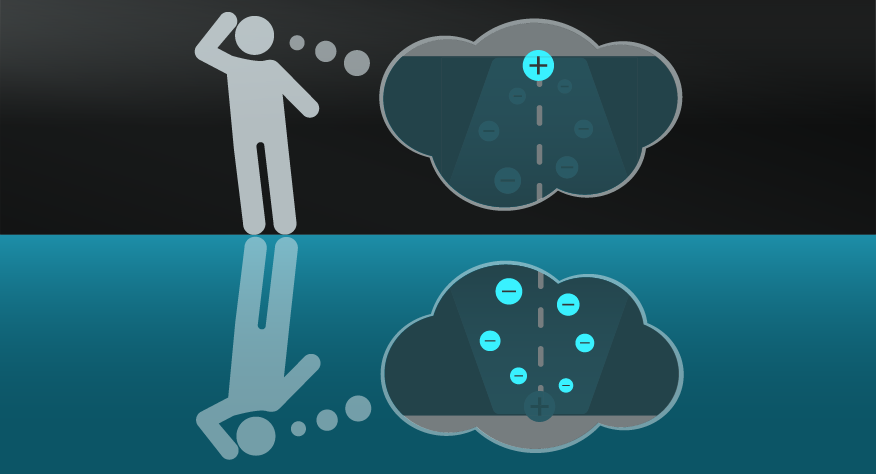

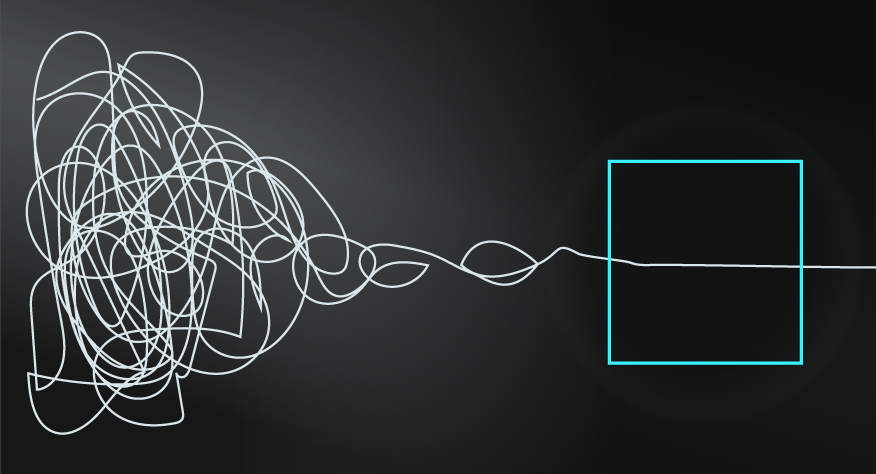

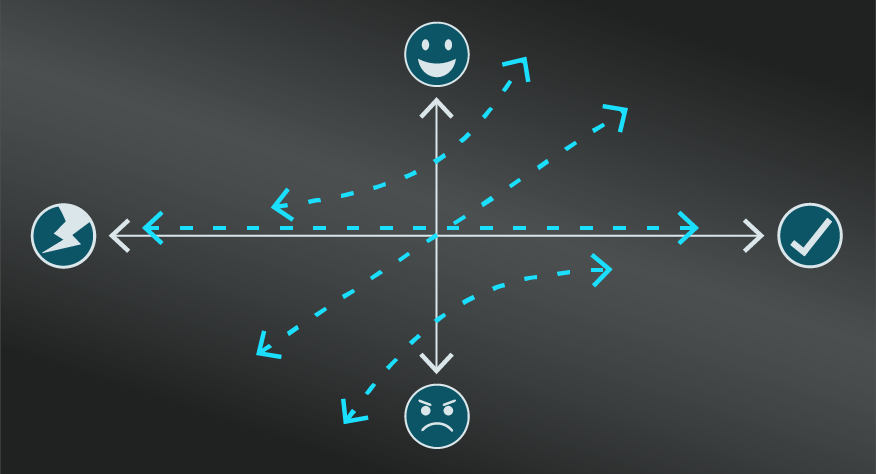

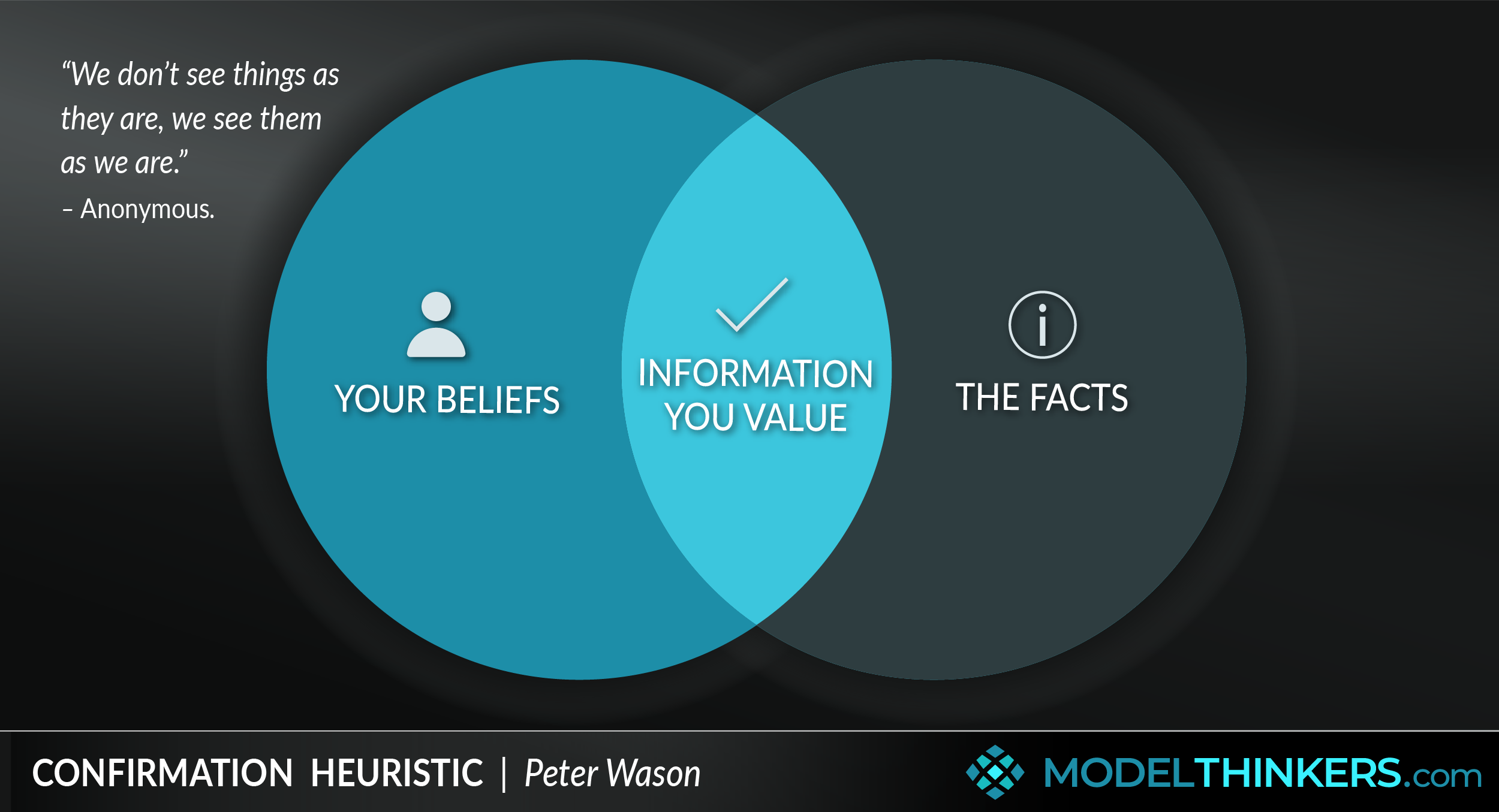

The Confirmation Heuristic leads you to seek out information that confirms your existing beliefs, mental models and hypotheses while discounting information that refutes them.

RATIONAL OR RATIONALISING?

The nature of this heuristic is captured in the anonymous quote, “We don’t see things as they are, we see them as we are.” Robert Heinlein went further when he observed, "Man (sic) is not a rational animal, he is a rationalising animal."

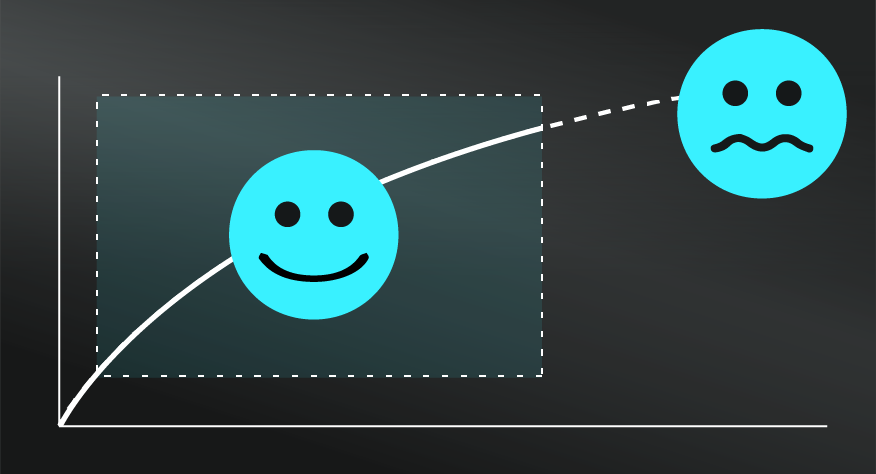

Also known as the Confirmation Bias, it is a commonly referenced model in behavioural economics and cognitive psychology. While it might be considered a useful filter for avoiding overwhelm, it more commonly plays out as a negative bias that prevents you from effectively learning, adapting to change, and growing.

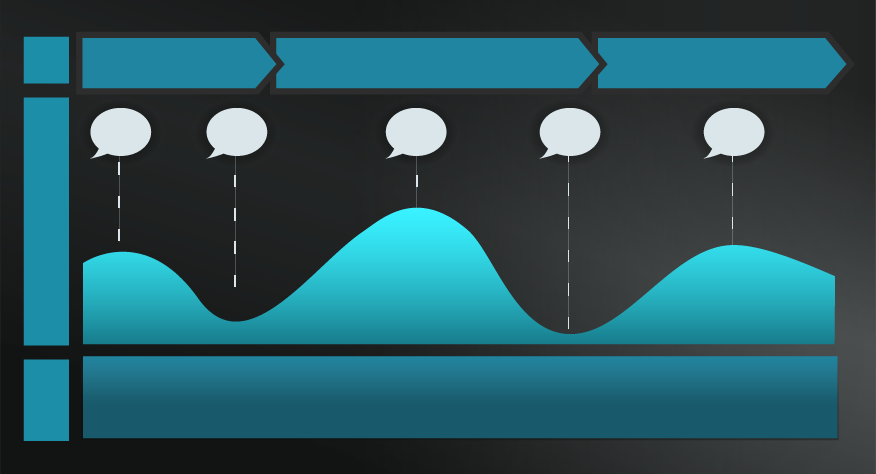

The Confirmation Heuristic manifests in all aspects of your life: how you view the people around you, how you understand the so-called 'objective facts' you observe, how you interpret and direct your research, and how you select and consume media and news.

IMPACT ON MEMORY.

Beyond the information consumption process, research has demonstrated that when you recall episodic memories, you will fill in information gaps using the Confirmation Heuristic, thus changing your memories to reinforce your beliefs and mental models. And you’ll do all this even in the face of contradictory evidence.

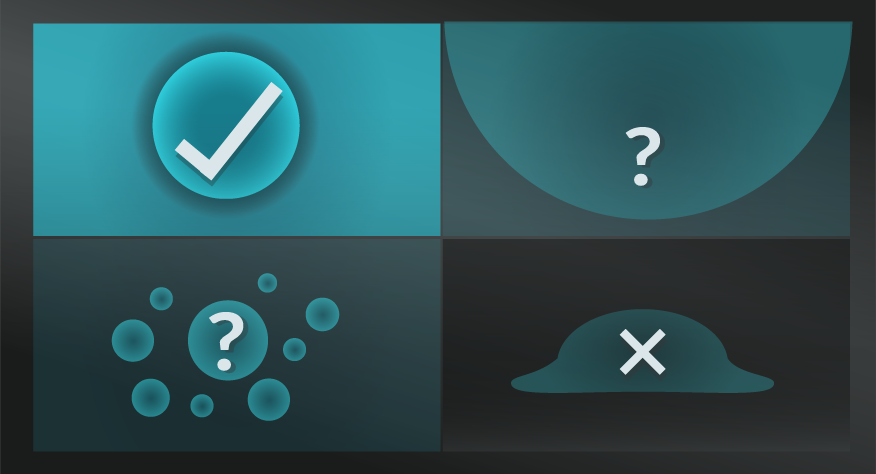

HOW TO INTERRUPT THIS BIAS.

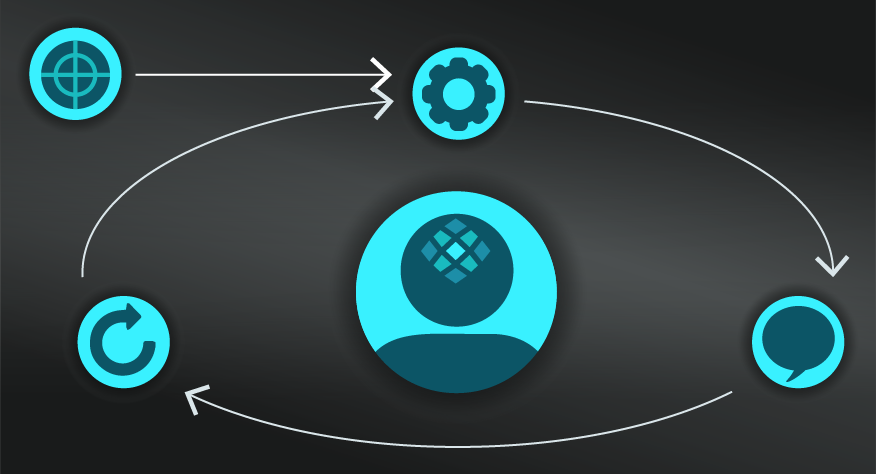

As with all heuristics, simply knowing about the Confirmation Heuristic will not prevent it from happening. Rather than trying to stop it, you're better off experimenting with these techniques to interrupt it:

- Habitually ask one of our favourite questions: ‘what would I see if I was wrong?’ to actively seek out counter-evidence;

- Imagine pursuing another option or argument, then play out its implications and results to compare them with your current approach and thinking;

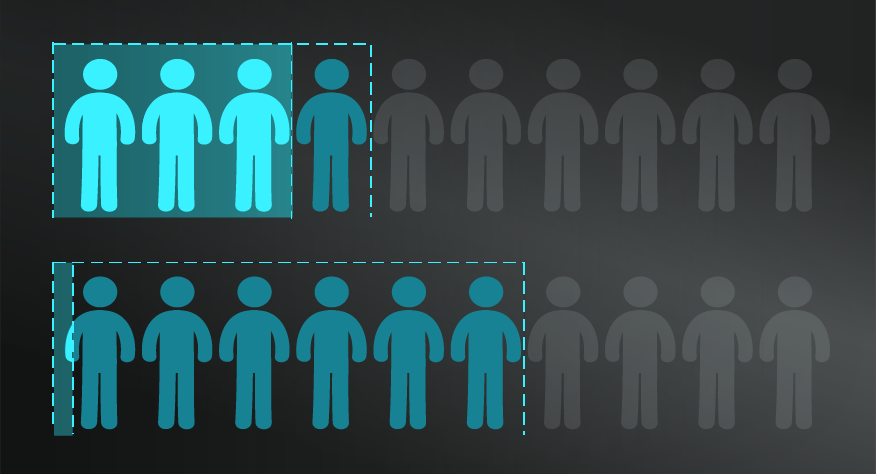

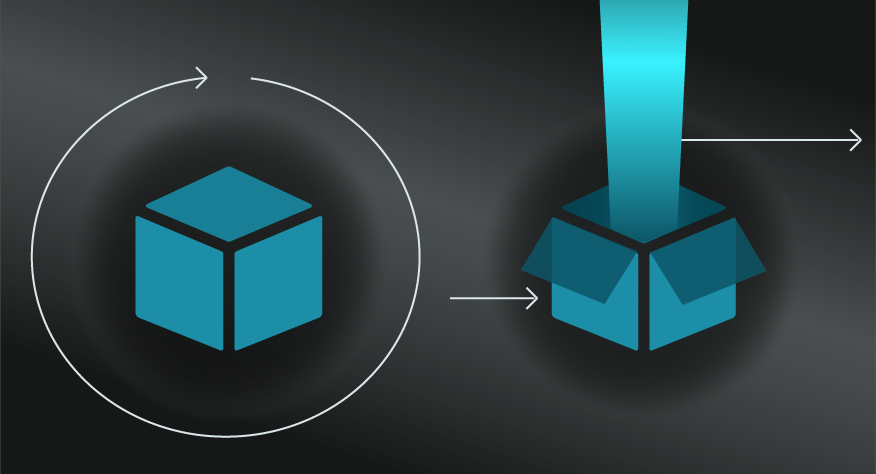

- Break out of your echo chamber by exposing yourself to more diverse views and perspectives, including working in diverse teams;

- Take a multi-perspective view with Munger's Latticework and apply several mental models to any situation;

- Apply the Scientific Method and treat your beliefs as hypotheses that need to be tested;

- Go back to basics and apply models such as First Principles and Occam's Razor to cut down on your assumptions;

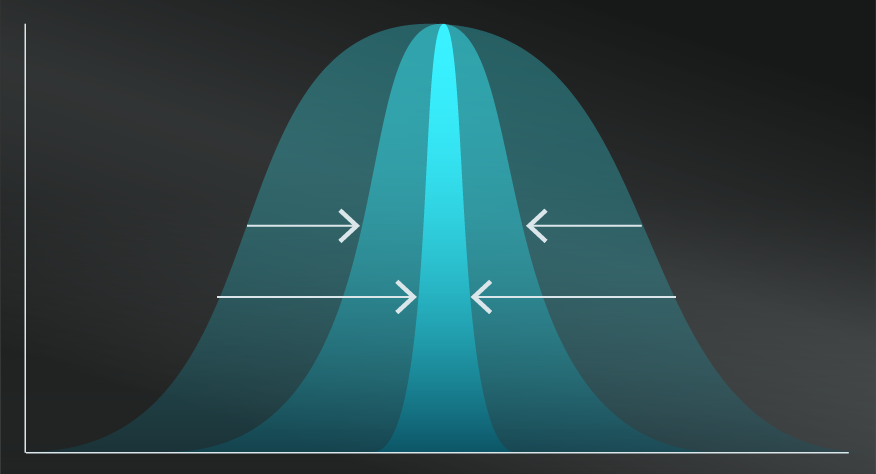

- Take a more complex and humble view of situations by embracing Probabilistic Thinking and remembering that the Map is Not the Territory.

See the Actionable Takeaways below for more.

IN YOUR LATTICEWORK.

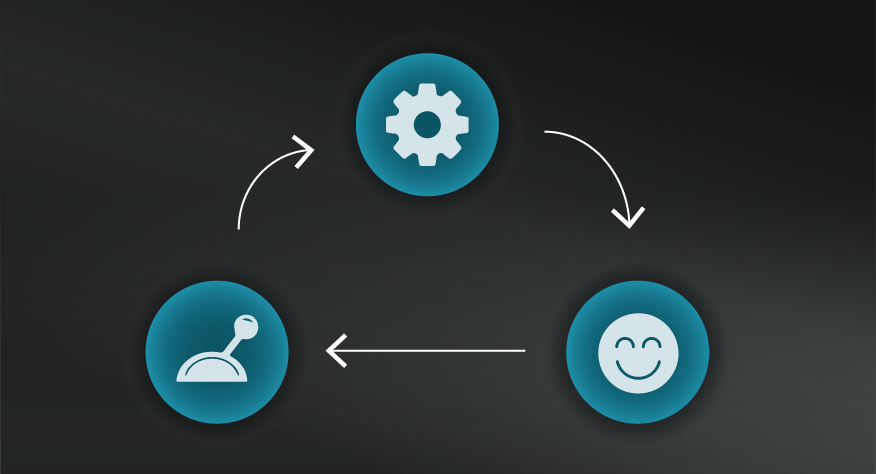

This is a core heuristic within behavioural economics and can be seen in the context of Fast and Slow Thinking. It helps to inform a range of other models such as Correlation vs Causation, the Anchoring Heuristic, Functional Fixedness and the Halo Effect.

- Resource yourself before trying to engage with counter views.

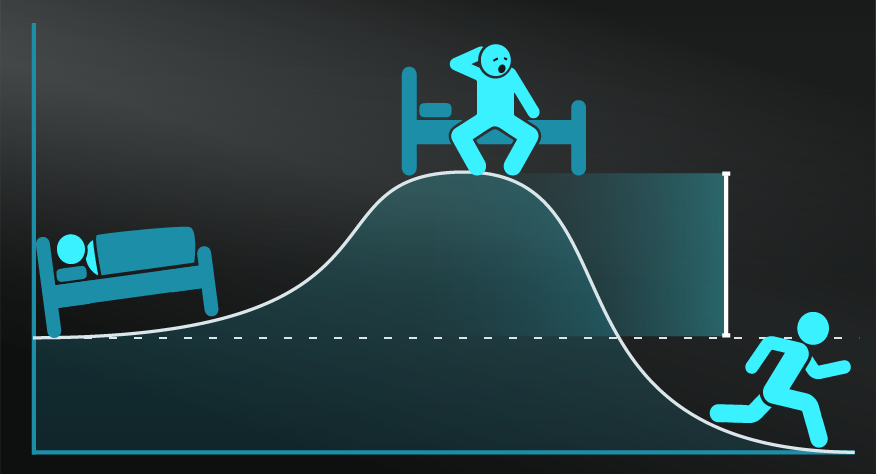

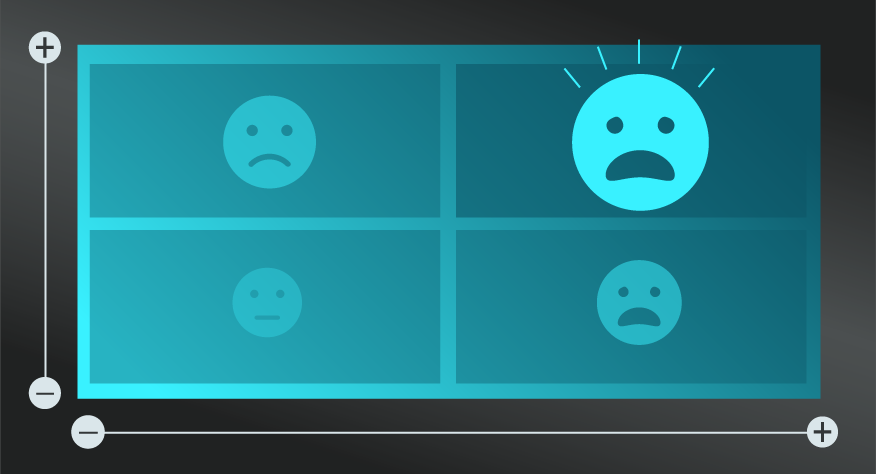

This is a heuristic, which means it’s a shortcut as outlined in the Fast and Slow Thinking model. Shortcuts like this have ease and currency in your mind, so to interrupt this one you’ll need to be resourced. That means being well-rested and feeling emotionally balanced, with opportunities for focused, slow thinking. Anything less and you will (understandably) fall back on your heuristics rather than taking the effortful route of challenging your existing mental models.

- Interrupt and slow down.

As with most biases, interrupting this one relies on slow thinking. Slow the process down and draw on your cognitive muscle rather than relying on your easy heuristics.

- Avoid filter bubbles and echo chambers on social media.

The advent of social media means that you can place yourself in environments where your beliefs are constantly and repeatedly reinforced and amplified. Interrupt this by connecting with alternative voices.

- Leverage diverse teams.

Diverse teams, with a range of views, can help to interrupt confirmation bias through debate and discussions. Though see the first point above about being resourced to remain open to such discussions.

- Leverage diverse mental models.

The Latticework of Mental Models approach that ModelThinkers advocates involves developing a broad latticework rather than relying on a few habitual mental models. In doing so, you’ll be able to draw on a range of approaches, often contradictory ones, to understand and act. Part of this is learning how to ‘hold your mental models lightly’ so you can unlearn them, or at least put them aside, in the face of evidence and information.

- Ask, ‘what would I see if I was wrong?’

We love this question. It forces you to explore alternatives and try to falsify your current views and belief systems. It’s amazing what reveals itself when this question is truly pursued, though again, it takes effort and requires you to be resourced.

- Maintain scepticism.

Aim to maintain scepticism, even for information that makes sense to you or that you were expecting. Consider using models such as the CRAAP Test to dig deeper. And remain humble by embracing Probabilistic Thinking and remembering that the Map is Not the Territory.

- Experiment and test.

Use the Scientific Method and think in terms of hypotheses that need to be tested. Go further by striving to falsify your current hypothesis through experimentation and testing. You can also consider Split Tests to gather data on comparisons.
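To make the split-test idea concrete, here is a minimal sketch of how you might check whether the difference between two versions is more than noise. It uses a standard two-proportion z-test, which is our choice for illustration and only one of several reasonable approaches. The function name `split_test` and the visitor and sign-up numbers are hypothetical.

```python
from math import sqrt, erf


def split_test(conv_a, n_a, conv_b, n_b):
    """Two-sided two-proportion z-test. Returns (z, p_value)."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # No variation at all (every or no visitor converted): nothing to compare.
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    # Standard normal CDF expressed through the error function.
    normal_cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - normal_cdf)
    return z, p_value


# Hypothetical data: version A got 120 sign-ups from 1000 visitors,
# version B got 150 sign-ups from 1000 visitors.
z, p = split_test(conv_a=120, n_a=1000, conv_b=150, n_b=1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

The point for this heuristic is to decide what result would count against your favoured version before you look at the data, rather than explaining the numbers away afterwards.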

Is it ironic that we couldn’t find satisfactory arguments against the confirmation heuristic?

This limitations section is included in every model’s description in recognition that the Map is Not the Territory and that ‘all models are wrong, some of them might be useful’. That said, so far we’ve only been able to dig up evidence supporting the confirmation heuristic, with little counter-evidence. We’ll keep looking but, until then, perhaps our failure to find counter-arguments is a testament to how convincing this model is?

This is everywhere.

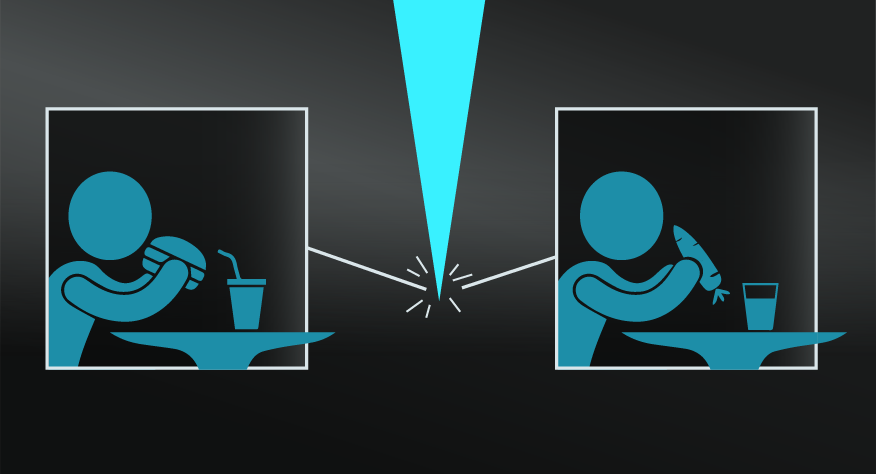

We couldn’t bring ourselves to compile an exhaustive list of examples because it is so apparent, everywhere. See it play out when two groups with opposing worldviews point to the same event as vindication for their opinions. Or observe it in yourself by scrolling through Twitter for a few minutes and noticing how quickly you sort the information you see based on your current beliefs. Consider the ‘box’ you put a workmate or family member in, only seeing the behaviour that reinforces your current mental models about them.

Beyond that, it’s been noted in eyewitness accounts of crimes and even scientific studies where researchers unconsciously sought out evidence to prove their hypothesis.

The confirmation heuristic is an incredibly common and sadly damaging model that would ideally be popularised along with strategies to interrupt it.

Use the following examples of connected and complementary models to weave the confirmation heuristic into your broader latticework of mental models. Alternatively, discover your own connections by exploring the category list above.

Connected models:

- Fast and slow thinking: the fundamental model for behavioural economics that underpins all heuristics.

- Munger’s latticework: in exploring a diverse range of mental models to counter the heuristic.

Complementary models:

- CRAAP test: test the information and sources you’re exploring.

- The scientific method: it can be diverted by the confirmation heuristic, but it’s another layer of rationality.

- Split testing: consider putting your idea to a test.

‘Confirmation Bias’ was coined by University College London’s Peter Wason, who ran an experiment in 1960 in which participants were given a numerical task. It demonstrated that, rather than taking a rational problem-solving approach, participants tested ideas that were based on their initial views and beliefs.
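The numerical task is not described above, so the sketch below assumes it is the one usually called the 2-4-6 task: participants are told that 2-4-6 fits a hidden rule and must discover the rule by proposing their own triples. The hidden rule was simply 'any ascending sequence'. The guessed hypothesis and the test triples here are our own hypothetical examples, chosen to show why tests that merely confirm your guess teach you nothing.

```python
def hidden_rule(triple):
    """The experimenter's rule in the 2-4-6 task: any ascending sequence."""
    a, b, c = triple
    return a < b < c


def my_hypothesis(triple):
    """A typical first guess (hypothetical): numbers increasing by two."""
    a, b, c = triple
    return b - a == 2 and c - b == 2


def informative(triple):
    """A test only teaches you something if your guess and the rule disagree."""
    return my_hypothesis(triple) != hidden_rule(triple)


# Triples chosen because they fit the guess (confirmation seeking).
confirming_tests = [(8, 10, 12), (1, 3, 5), (20, 22, 24)]
# Triples chosen because they break the guess ('what would I see if I was wrong?').
disconfirming_tests = [(1, 2, 3), (5, 10, 20), (6, 4, 2)]

for label, tests in [("confirming", confirming_tests),
                     ("disconfirming", disconfirming_tests)]:
    for triple in tests:
        print(label, triple,
              "fits rule:", hidden_rule(triple),
              "informative:", informative(triple))
```

Every confirming triple gets a 'yes' from the rule, which feels like progress but cannot separate the guess from the real rule. Only triples that break the guess can reveal that the real rule is broader.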
